On April 17, 2019, I gave a talk at the Digital Public Library of America conference (DPLAfest). This is the transcript of that talk.

I love the librarian community. You all are deeply committed to producing, curating, and enabling access to knowledge. Many of you embraced the internet with glee, recognizing its potential to help so many more people access critical information. Many of you also saw the democratic and civic potential of this new technology, not to mention the importance of an informed citizenry in a democratic world. Yet, slowly and systematically, a virus has spread, using technology to tear at the social fabric of public life.

This shouldn’t be surprising. After all, most of Silicon Valley in the late 90s and early aughts was obsessed with Neal Stephenson’s Snow Crash. How did they not recognize that this book was dystopian?

Epistemology is the term that describes how we know what we know. Most people who think about knowledge think about the processes of obtaining it. Ignorance is often assumed to be simply a state of not yet knowing. But what if ignorance is strategically manufactured? What if the tools of knowledge production are perverted to enable ignorance? In 1995, Robert Proctor and Iain Boal coined the term “agnotology” to describe the strategic and purposeful production of ignorance. In an edited volume called Agnotology, Proctor and Londa Schiebinger collect essays detailing how agnotology is achieved. Whether we’re talking about the erasure of history or the undoing of scientific knowledge, agnotology is a tool of oppression used by the powerful.

Swirling all around us are conversations about how social media platforms must get better at content management. Last week, Congress held hearings on the dynamics of white supremacy online and on the perception that technology companies engage in anti-conservative bias. Many people who are steeped in history and committed to evidence-based decision-making are experiencing a collective sense of being gaslit. The term comes from Gaslight, a film about domestic abuse, and describes how an abuser can intentionally destabilize someone’s sense of reality. How do you process a black conservative commentator testifying before the House that the Southern strategy never happened and that white nationalism is an invention of the Democrats to “scare black people”? Keep in mind that this commentator was intentionally trolled by the terrorist in Christchurch; she responded to this atrocity with tweets containing “LOL” and “HAHA.”

So let’s talk about Christchurch. We all know the basic narrative. A terrorist espousing white nationalist messages livestreamed himself brutally murdering 50 people worshipping in a New Zealand mosque. The video was framed like a first-person shooter video game. Beyond the atrocity itself, what else was happening?

This terrorist understood the vulnerabilities of both social media and news media. The message he posted on 8chan announcing his intention included links to his manifesto and other sites, but it did not include a direct link to Facebook; he didn’t want Facebook to know that the traffic came from 8chan. The video included many minutes of him driving around, presumably to build an audience but also, quite likely, in an effort to evade any content moderators who might be looking. He titled his manifesto with a well-known white nationalist call sign, knowing that the news media would cover the name of the manifesto, which, in turn, would prompt people to search for that concept. And when they did, they’d find a treasure trove of anti-Semitic and white nationalist propaganda. This is the exploitation of what’s called a “data void.” He also trolled numerous people in his manifesto, knowing full well that the media would shine a spotlight on them and create distractions and retractions and more news cycles. He produced a media spectacle. And he learned how to do it by exploiting the information ecosystem we’re currently in. Afterwards, every social platform was inundated with millions and millions of copies and alterations of the video, uploaded through a range of fake accounts, whether to burn the resources of technology companies, shame them, or test their guardrails for future exploits.

What’s most notable about this terrorist is that he’s explicit in his white nationalist commitments. Most of those who are propagating white supremacist logics are not. Whether we’re talking about the so-called “alt-right” who simply ask questions like “Are Jews people?” or the range of people who argue online for racial realism based on long-debunked, fabricated science, there are a growing number of people who are propagating conspiracy theories or simply asking questions as a way of enabling and magnifying white supremacy. This is agnotology at work.

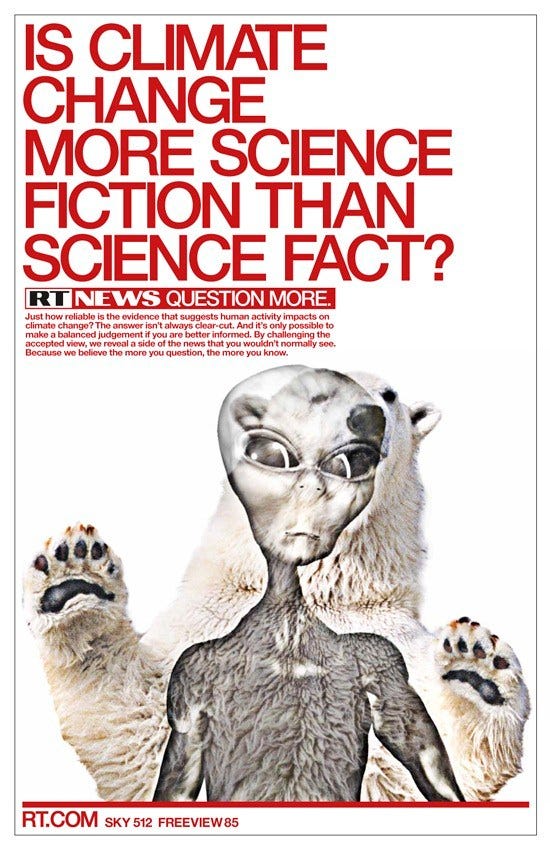

What’s at stake right now is not simply a matter of hate speech vs. free speech or the role of state-sponsored bots in political activity. It’s much more basic. It’s about purposefully and intentionally seeding doubt to fragment society. To fragment epistemologies. This is a tactic that was well-honed by propagandists. Consider this Russia Today poster.

But what’s most profound is how it’s being done en masse now. Teenagers aren’t only radicalized by extreme sites on the web. It now starts with a simple YouTube query. Perhaps you’re a college student trying to learn a concept like “social justice” that you’ve heard in a classroom. The first result you encounter is from PragerU, a conservative organization that is committed to undoing so-called “leftist” ideas that are taught at universities. You watch the beautifully produced video, which promotes many of the tenets of media literacy. Ask hard questions. Follow the money. The video offers a biased and slightly conspiratorial take on what “social justice” is, suggesting that it’s not real, but instead a manufactured attempt to suppress you. After you watch this, you watch more videos of this kind from people who are professors and other apparent experts. All of this makes you think differently about the term when you encounter it in your reading. You ask your professor a question raised by one of the YouTube influencers. She reacts in horror and silences you. The videos all told you to expect this. So now you want to learn more. You go deeper into a world of people who are actively anti-“social justice warriors.” You’re introduced to anti-feminism and racial realism. How far does the rabbit hole go?

YouTube is the primary search engine for people under 25. It’s where high school and college students go to do research. Digital Public Library of America works with many phenomenal partners who are curating their archives and making them available. Yet, how much of that work is available on YouTube? Most of DPLA’s partners want their content on their own sites. They want to be destination sites that people visit. Much of this content is visual and textual, but are there explainers about it on YouTube? How many scientific articles have video explainers associated with them?

Herein lies the problem. One of the best ways to seed agnotology is to make sure that doubtful and conspiratorial content is easier to reach than scientific material. And then to make sure that whatever scientific information is available is undermined. One tactic is to exploit “data voids.” These are areas within a search ecosystem where there’s no relevant data; those who want to manipulate media purposefully exploit them. Breaking news is one example of this. Another is to co-opt a term that was left behind, like “social justice.” But let me offer you another. Some terms are strategically created to achieve epistemological fragmentation. In the 1990s, Frank Luntz was the king of doing this with terms like “partial-birth abortion,” “climate change,” and “death tax.” Every week, he coordinated congressional staffers, telling them to focus on the term of the week and push it through the news media. All to create a drumbeat.

Illustration by Jim Cooke

Today’s drumbeat happens online. The goal is no longer just to go straight to the news media. It’s to first create a world of content and then to push the term through to the news media at the right time so that people search for that term and receive specific content. Terms like caravan, incel, crisis actor. By exploiting the data void, or the lack of viable information, media manipulators can help fragment knowledge and seed doubt.

Media manipulators are also very good at messing with structure. Yes, they optimize search engines, just like marketers. But they also look to create networks that are hard to undo. YouTube has great scientific videos about the value of vaccination, but countless anti-vaxxers have systematically trained YouTube to make sure that people who watch the Centers for Disease Control and Prevention’s videos also watch videos asking questions about vaccinations or videos of parents who are talking emotionally about what they believe to be the result of vaccination. They comment on both of these videos, they watch them together, they link them together. This is the structural manipulation of media. Journalists often get caught up in telling “both sides,” but the creation of sides is a political project.

And this is where you come in. You all believe in knowledge. You believe in making sure the public is informed. You understand that knowledge emerges out of contestation, debate, scientific pursuit, and new knowledge replacing old knowledge. Scholars are obsessed with nuance. Producers of knowledge are often obsessed with credit and ownership. All of this is being exploited to undo knowledge today. You will not achieve an informed public simply by making sure that high-quality content is publicly available and presuming that credibility is enough while you wait for people to come find it. You have to understand the networked nature of the information war we’re in, actively be there when people are looking, and blanket the information ecosystem with the information people need to make informed decisions.

Thank you!