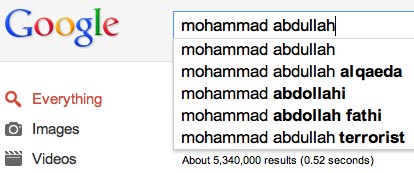

You’re a 16-year-old Muslim kid in America. Say your name is Mohammad Abdullah. Your schoolmates are convinced that you’re a terrorist. They keep typing in Google queries like “is Mohammad Abdullah a terrorist?” and “Mohammad Abdullah al Qaeda.” Google’s search engine learns. All of a sudden, auto-complete starts suggesting terms like “Al Qaeda” as the next term in relation to your name. You know that colleges are looking up your name and you’re afraid of the impression that they might get based on that auto-complete. You are already getting hostile comments in your hometown, a decidedly anti-Muslim environment. You know that you have nothing to do with Al Qaeda, but Google gives the impression that you do. And people are drawing that conclusion. You write to Google but nothing comes of it. What do you do?

This is guilt through algorithmic association. And while this example is not a real case, I keep hearing about real cases. Cases where people are algorithmically associated with practices, organizations, and concepts that paint them in a problematic light even though there’s nothing on the web that associates them with that term. Cases where people are getting accused of affiliations that get produced by Google’s auto-complete. Reputation hits that stem from what people _search_ not what they _write_.

It’s one thing to be slandered by another person on a website, on a blog, in comments. It’s another to have your reputation slandered by computer algorithms. The algorithmic associations do reveal the attitudes and practices of people, but those people are invisible; all that’s visible is the product of the algorithm, without any context of how or why the search engine conveyed that information. What becomes visible is the data point of the algorithmic association. But what gets interpreted is the “fact” implied by said data point, and that gives an impression of guilt. The damage comes from creating the algorithmic association. It gets magnified by conveying it.

- What are the consequences of guilt through algorithmic association?

- What are the correction mechanisms?

- Who is accountable?

- What can or should be done?

Note: The image used here is Photoshopped. I did not use real examples so as to protect the reputations of people who told me their story.

Update: Guilt through algorithmic association is not constrained to Google. This is an issue for any and all systems that learn from people and convey collective “intelligence” back to users. All of the examples that I was given from people involved Google because Google is the dominant search engine. I’m not blaming Google. Rather, I think that this is a serious issue for all of us in the tech industry to consider. And the questions that I’m asking are genuine questions, not rhetorical ones.
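To make the mechanism concrete, here is a minimal sketch of a system that learns from what people type and hands the result back to everyone. It is a toy, not Google's actual algorithm (which is not public and surely weighs many more signals). The class name `ToyAutocomplete`, its methods, and the name "Jane Doe" are all hypothetical, chosen only for illustration. The one assumption that matters is that suggestions are ranked purely by how often a query was typed.

```python
from collections import Counter


class ToyAutocomplete:
    """Hypothetical suggester that ranks completions by raw query frequency."""

    def __init__(self):
        # Maps each normalized query to the number of times it was typed.
        self._counts = Counter()

    @staticmethod
    def _normalize(text):
        # Lowercase and collapse whitespace so trivial variants count together.
        return " ".join(text.lower().split())

    def record(self, query):
        """Log one search. This is the only thing the system 'learns' from."""
        self._counts[self._normalize(query)] += 1

    def suggest(self, prefix, k=3):
        """Return up to k past queries starting with prefix, most frequent first."""
        p = self._normalize(prefix)
        matches = [(q, n) for q, n in self._counts.items() if q.startswith(p)]
        # Sort by descending count, then alphabetically to break ties.
        matches.sort(key=lambda item: (-item[1], item[0]))
        return [q for q, _ in matches[:k]]


ac = ToyAutocomplete()
ac.record("Jane Doe soccer")
ac.record("Jane Doe debate team")
# A few classmates repeat the same accusatory query.
for _ in range(5):
    ac.record("Jane Doe fraud")

print(ac.suggest("jane doe"))
```

In this toy, five repeated searches are enough to push the accusatory completion to the top, even though no page anywhere says anything of the kind. The suggestion reflects only what people searched, not what anyone wrote.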

It would be interesting to see (and might get a reaction) if you could generate this effect on some high profile people, e.g. by starting a campaign to have lots of people google “larry page embezzlement” or some such….

Privacy and security violations of everyday life happen all the time. It’s just that they usually happen in ‘edge cases’ or to non-mainstream people. By living in an innumerate society that cannot look at the risk of catastrophic but rare events in any kind of rational manner we have condemned ourselves to walking willingly into .

It smells like greed that search engines (including meta-services) don’t display an entry or symbolic icon along with automated results, to link a page which would offer the whole spectrum of possible connotations. Kinda reminds me of computer-generated news selection: the one behind Yahoo/MSN/Google news, e.g., helps promote the worst free articles, and so creates an artificial reality. The ridiculous fine print in their footers will be ignored unless it is made more visible. What people then re-post on their blogs and Wikipedia will again be fed to the algorithms.

The underlying problem in this case is an association with people who have the same name. Naming conventions that were appropriate to tell people apart in smaller groups (like medieval cities) do not work well on the scale of the internet.

Maybe parents should try to give their kids names with zero occurrences in the internet. I just checked: zero hits for my two kids 🙂

This is very much like the old “Google Bombing” trick. I remember conservatives “bombing” John Kerry’s name during his campaign for president by creating links on their sites to his site with the word “waffle”. For a short time, if you Googled for “waffle”, the first result was Kerry’s site.

The big difference here is that this new kind of “bombing” is a lot easier, as it doesn’t require a concerted effort by hundreds who all have their own websites; a few friends can simply initiate a handful of searches.

David, people do that, or try to. It’s called googlebombing. It’s one of Anon’s tactics, but other groups have been organized to do it, too. You basically plug in a particular search over and over and over on a lot of machines in a lot of places. Enough searches from enough people and the algorithm adjusts to reflect it.

Based on my own anecdotal experience, this can definitely be problematic. My last name is “Finger” and as such the first 7 or 8 hits when someone googles my name are usually adult content. I have had to explain the situation to potential employers during the interview process, who were at least conscientious enough to double check with me prior to eliminating me from the running. Rather annoying in my case, but certainly not as extreme as the example you gave…

Danah,

This is a great article. I think the most common algorithmic association I see in business is when you type in a company name and the term scam comes up next to the company name. For people unfamiliar with suggested search it might scare some potential clients from a company just because the term scam gets associated with a company name.

Perhaps one approach to counter negative algorithmic associations is to enter positive search inquiries? But I’m guessing that that’s not feasible nor is it a long-term solution that addresses the underlying issues of what drives users to suss out such mis/disinformation (per Henry Jenkins’ post today)…I’m curious to hear what others propose.

The algorithm is a tool ….you may as well be blaming knives for killing people instead of the people using them. It’s unfortunate, sure, but you can’t shelter everyone from the stupidity of children. Or some adults for that matter.

John, check out Rick Santorum’s “Google problem!”

Sounds like you’re angling for a promotion at Microsoft.

Why would you write about a competitor’s product in this way, as if you’re a concerned citizen, when you practically made the whole thing up, including the photoshopped “example” you admitted to creating.

Are you just trying to create a controversy or bad PR for Google?

If you REALLY wanted a solution for the supposed problem you’re proposing (which may not even be a real problem, it seems); why not spend your time trying to educate people on how to think critically; instead of uselessly fanning BS PR at Google?

The thing is, only stupid people would form their opinion of someone based solely on Google Autocomplete. But since 80% of the population are morons…

An Australian actress was involved several years ago in a “risque photo leaked” story (not even “nude photo”, if I recall correctly). For more than a year afterwards at a certain Aussie newspaper’s site, her name would come up in large type in the tag-cloud for search terms like “pornography”.

I raised the topic with a web-law specialist, who reckoned it might be worth the actress suing.

The paper in question no longer uses the tag-cloud.

Did you read Eli Pariser’s “The Filter Bubble”? I think he touches on some of the same issues. His point is that Google confines us to our own individual bubbles through “personalized search”. My guess after reading it is that the result you show here will also be different for different people? If I have already searched for terror-related stories or knowledge, is the likelihood of my getting results like the ones you photoshopped above maybe even greater than if I had never searched for anything like that?

You probably already saw this but here is a link to his talk at TED:

http://www.ted.com/talks/eli_pariser_beware_online_filter_bubbles.html

I very often plead for forgiveness in social media – and I think your post underlines that this is a human attribute that we need to work into future algorithms – forgiving mistakes and suggesting new versions of the person we are learning about!

This sounds horrible, but I wonder if what they’re seeing may be the effect of Google’s “filter bubble,” in which the results they’re seeing are dictated by past results sent to that particular browser based on a session cookie, or that particular signed-in Google account?

I wanted to follow up on my first comment because I just read your recent post “I do not speak for my employer.”

It is pretty clear that I shouldn’t have begun my comment with “Sounds like you’re angling for a promotion at Microsoft”; and of course, as an anonymous commenter out on the internet, what does it matter what I say at all?

But, I really, truly, feel like it is disingenuous (at best) to have photoshopped that image, put it right at the head of your post; and then proceed to draw conclusions based on something that, frankly, it really seems like you made up.

I really just don’t buy this “algorithmic associations” stuff. If there is a problem here, it is not Google. And if there isn’t a problem, then you appear to be creating one for no good reason.

I apologize for assuming it was a PR or a MS vs. Google thing related to your position. But I hope you realize that your position DOES give you a certain credibility, and that this post is now floating around the internet being read by the very same not-critically-thinking people that you seem to have a problem with in the first place.

I’m thinking out loud here now but I wonder what the point is. If you’re just randomly sharing your random thoughts; I think it’s unfortunate the way you’ve framed them.

Anyway that’s my 4 cents. Feel free to delete these comments as they were for you directly (not your readers).

@David: This has already been done, with little fanfare. Late into George W. Bush’s first term as POTUS, there was a unified effort to associate searches for his name with the term “miserable failure”. Not much came of it, really. And, that’s pretty high profile.

Let me be clear – this could happen on any service where algorithms learn from a population and then present the collective “intelligence” to everyone. All of the examples that I was given from actual people occurred on Google, in part because it’s so widely spread.

I’m also not blaming Google (or any other company). I’m genuinely asking the following questions:

1) What are the consequences of guilt through algorithmic association?

2) What are the correction mechanisms?

3) Who is accountable?

4) What can or should be done?

There is a fundamentally incorrect assumption made in this article. The auto-complete does not bring up suggestions based on previously searched terms only. The more important factor is the number of pages that are linked to each search phrase.

Why are these auto complete things, which can be quite annoying, using prior search terms instead of likely result terms? It seems to me that would be just as easy, or easier actually, from a programming and maintenance perspective, not to mention the more important issue of RELEVANCE. Jeez, this is why so much software is so poorly written — developers are so far down the rabbit-hole they can’t even see the surface anymore.

And if I ever have a son, I think I’m gonna name him

Bill or George! Anything but Mohammad ! I still hate that name!

(freely adapted from Johnny Cash)

Just a thought.

The consequences are pretty obvious in that misconstruing a person will likely affect either positively or negatively the person involved. BoingBoing just had a piece about a comic written around this concept when a college freshman is mistaken for an online sex worker.

Correction mechanisms would have to center around making the results more accurate allowing for disambiguation. Several possibilities here, topic map type one to one relationships or perhaps some faceting “did you mean the terrorist or your classmate”.

Accountability is a big problem. I don’t think the search engine can be held liable just because it has an inefficient algorithm.

“… only stupid people would form their opinion of someone based solely on Google Autocomplete.”

I read a few comments elsewhere critiquing this post for reasons similar to eyebeam’s comment above. I thought I’d jump in and mention how I see this tying in with a major argument in George Lakoff’s Don’t Think of an Elephant. By even presenting a ‘frame’ (in this case, ‘terrorism’ etc.) you (Google) are prompting readers to see the individual within those terms.

This also happens when you negate a frame. Perhaps the simplest example is of Nixon’s “I am not a crook” statement. Even by explicitly claiming that you are not something, you are still setting the conversation up to be considered in those terms, and prompting the audience to see you as a potential for both possibilities. You are still ‘evoking the frame’, as Lakoff puts it, and making the audience see you in relation to it. The way around this, the way to be more likely received as neutral in these terms, is to not bring up the ‘crook’ frame – or ‘terrorist’ frame – in the first place.

So, even if Google searchers are intelligent and would always question the example results in your post, it’s still not a positive thing for a person’s name to appear next to identifiers they are not comfortable with. And the more this sort of thing occurs, the worse it is for those being profiled.

Anyway, I’m not really addressing the four questions/concerns you had (they’re difficult!), but I thought I’d share a slightly different way of looking at how this is an issue in the first place.

Great post, as always =)

This would be terrifying if we lived in a world where people never hit enter and actually searched on Google. If there’s no content anywhere on the web that associates the good person with the bad thing, then the only problem comes from people who are swayed by the drop-down menu that’s always filled with retarded things. The only problem in that scenario is making sure people that stupid are never in a position of decision-making/power.

@Andrew McNicol I agree, let them be morons if so – still the consequences for the affected individual can be ugly, and difficult to justify.

Does the approach to solely provide “free” services without a choice in some circumstances involve guilt? Advertising which enables maintenance may require popular links, in order to give a familiar feeling. Without the choice to opt-out or at least partially reduce the financial burden, can there be a solution?

As far as I’ve heard (feedback to requests), pay-services often don’t cover the bills because customers are likely to unsubscribe, feeling that they pay a lot compared to their friends. Advertising is forced to go with what people like and talk about, though it is not itself responsible for that. – Living in a country where people earn plenty in sectors like education, it e.g. feels weird that “rights as a consumer” often are being taught without consideration of the already narrow calculations, just because these kinds of talks win trust easily and suggest savoir vivre of the speaker.

I’d like to see a search engine with premium services one can subscribe to, and options for how to weight general popularity compared to relevancy in more neutral blogs (based on source of backlinks and other data). National language versions of search engines meanwhile are known to be spammed worthless for software-related searches, and maybe there is a market?

Wow, photoshopping a competitor’s web results as the leader to your post didn’t feel slightly misleading? Unsubscribing.

You shouldn’t have chosen Mohammad Abdullah as an example name. That is worse than if you had used a real one. This will offend any Muslim.

I’d like to add something related, for fairness: Many Google competitors are more in the established business of boxed software, but that also relies heavily on word-of-mouth advertising. That again is powered by greedy computer magazines generally trying to blend e.g. people’s fear of germs with computer viruses, or how to *always* get the cheapest price, resp. music/software piracy. That magazine style can be at times quite racist on a subtle level, encouraging more control pressure in society (at the expense of minorities), or over-the-top supervision by parents. It has rarely been criticized.

The market seems to have a decades-old demand to follow predefined associations? Could a player survive without going with what the people talk about? And, to what degree does the respective company support the development of other approaches? In that regard, one has to give Google great credit for powering many nonprofit projects for free or very little, in their early stages when they can’t afford much.

Would be a nice topic to further discuss. 🙂